Python is everywhere. Data science, web backends, automation scripts, machine learning pipelines—the language dominates modern software development. But here's Python's dirty secret that every developer knows: it's slow. Really slow compared to compiled languages. We tolerate this slowness because Python's productivity benefits outweigh performance costs for most applications. But when performance matters—processing large datasets, handling high-traffic APIs, running complex models—suddenly that slowness becomes painful. When I discovered Codeflash, a tool claiming to automatically optimize Python code up to 10x faster using AI, I was simultaneously excited and skeptical. Can AI really understand code well enough to optimize it automatically? Let's dig in.

The Creative Vision: AI as Your Senior Developer Pair

From a creative standpoint, Codeflash represents something genuinely ambitious: using AI not just to write code, but to improve code that already exists. That's a subtly different and arguably harder problem.

The creative insight here is recognizing that code optimization is a knowledge transfer problem. Senior developers know optimization tricks—using list comprehensions instead of loops, leveraging built-in functions, avoiding repeated computations, choosing appropriate data structures. Junior developers often write functional but inefficient code because they haven't accumulated this knowledge. Codeflash attempts to democratize senior-level optimization knowledge through AI, making expert-level performance improvements accessible to everyone.
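To make the "senior developer tricks" concrete, here is a minimal sketch of one such pattern: choosing a better data structure. The function names are hypothetical and are not taken from Codeflash; the example only illustrates the kind of rewrite an experienced developer (or an optimization tool) might propose. It assumes the list elements are hashable.

```python
def find_common_slow(a, b):
    """Straightforward version: every `in` test scans a whole list."""
    common = []
    for x in a:
        # `x in b` and `x not in common` are both linear scans.
        if x in b and x not in common:
            common.append(x)
    return common


def find_common_fast(a, b):
    """Same result and same ordering, using sets for membership tests."""
    b_set = set(b)   # built once, reused for every lookup
    seen = set()     # tracks values already emitted
    common = []
    for x in a:
        if x in b_set and x not in seen:
            seen.add(x)
            common.append(x)
    return common


if __name__ == "__main__":
    left = [3, 1, 2, 3, 5]
    right = [5, 3, 9]
    print(find_common_slow(left, right))
    print(find_common_fast(left, right))
```

Both functions return the shared values in the order they first appear in `a`. The second simply avoids repeated list scans, which is where the larger gains tend to come from as inputs grow.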

What I find creatively interesting is the verification aspect mentioned in the product description. The tool doesn't just blindly transform code—it uses "advanced AI technology and verification methods" to find optimal versions. This suggests awareness that code optimization isn't just about making things faster; it's about ensuring the optimized code still produces correct results. That's sophisticated thinking about a genuinely hard problem.
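The product description does not say how Codeflash verifies its output, so the following is only my own sketch of what "verify, then measure" could look like in principle. All names here (`behaves_the_same`, `speedup`, and the three sample functions) are hypothetical. The idea is to accept a candidate rewrite only if it matches the original on a set of test inputs, and only then to compare timings.

```python
import timeit


def total_squares_loop(numbers):
    """Original: explicit accumulation loop."""
    total = 0
    for n in numbers:
        total += n * n
    return total


def total_squares_builtin(numbers):
    """Candidate rewrite: delegates the accumulation to the built-in sum()."""
    return sum(n * n for n in numbers)


def total_squares_buggy(numbers):
    """A plausible-looking but wrong rewrite: it skips the first element."""
    return sum(n * n for n in numbers[1:])


def behaves_the_same(original, candidate, test_inputs):
    """Return True only if both functions agree on every argument tuple."""
    for args in test_inputs:
        if original(*args) != candidate(*args):
            return False
    return True


def speedup(original, candidate, args, repeats=5, number=200):
    """Ratio of best-of-N timings; values above 1.0 mean the candidate is faster."""
    t_orig = min(timeit.repeat(lambda: original(*args), repeat=repeats, number=number))
    t_cand = min(timeit.repeat(lambda: candidate(*args), repeat=repeats, number=number))
    return t_orig / t_cand


if __name__ == "__main__":
    inputs = [([],), ([1, 2, 3],), (list(range(100)),)]
    print(behaves_the_same(total_squares_loop, total_squares_builtin, inputs))
    print(behaves_the_same(total_squares_loop, total_squares_buggy, inputs))
    # The measured ratio depends on the machine and interpreter; it may be close to 1.
    print(speedup(total_squares_loop, total_squares_builtin, (list(range(1000)),)))
```

Agreement on a handful of inputs is evidence, not proof. A check like this catches the buggy rewrite above, but subtler differences (side effects, exceptions, floating-point behavior) would need broader testing.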

The integration strategy shows creative product thinking too. By embedding into GitHub Actions and VS Code—tools developers already use daily—Codeflash becomes invisible infrastructure rather than another app demanding attention. Code optimization happens automatically during normal development workflow. That's creative friction reduction.

The "optimize all new code" positioning is creatively ambitious. Rather than being a tool you occasionally use when you notice performance problems, Codeflash positions itself as continuous optimization that prevents performance issues from entering your codebase in the first place. It's shifting from reactive optimization to proactive optimization. That mental model shift is creative.

However, the creative vision has potential overreach. Claiming "up to 10x faster" sets extremely high expectations. While technically true for specific cases—a poorly written nested loop might indeed improve dramatically—average improvements are likely much more modest. The marketing creativity might create expectation gaps that damage trust.

The "any Python code" claim is creatively bold but potentially problematic. Python applications vary wildly—web frameworks, data processing, scientific computing, automation scripts. Each domain has different optimization patterns. Claiming universal optimization capability requires either incredibly sophisticated AI or some qualification that isn't immediately apparent.

Disruption Analysis: Challenging Human Optimization Expertise

Let's examine whether Codeflash can disrupt existing Python optimization approaches.

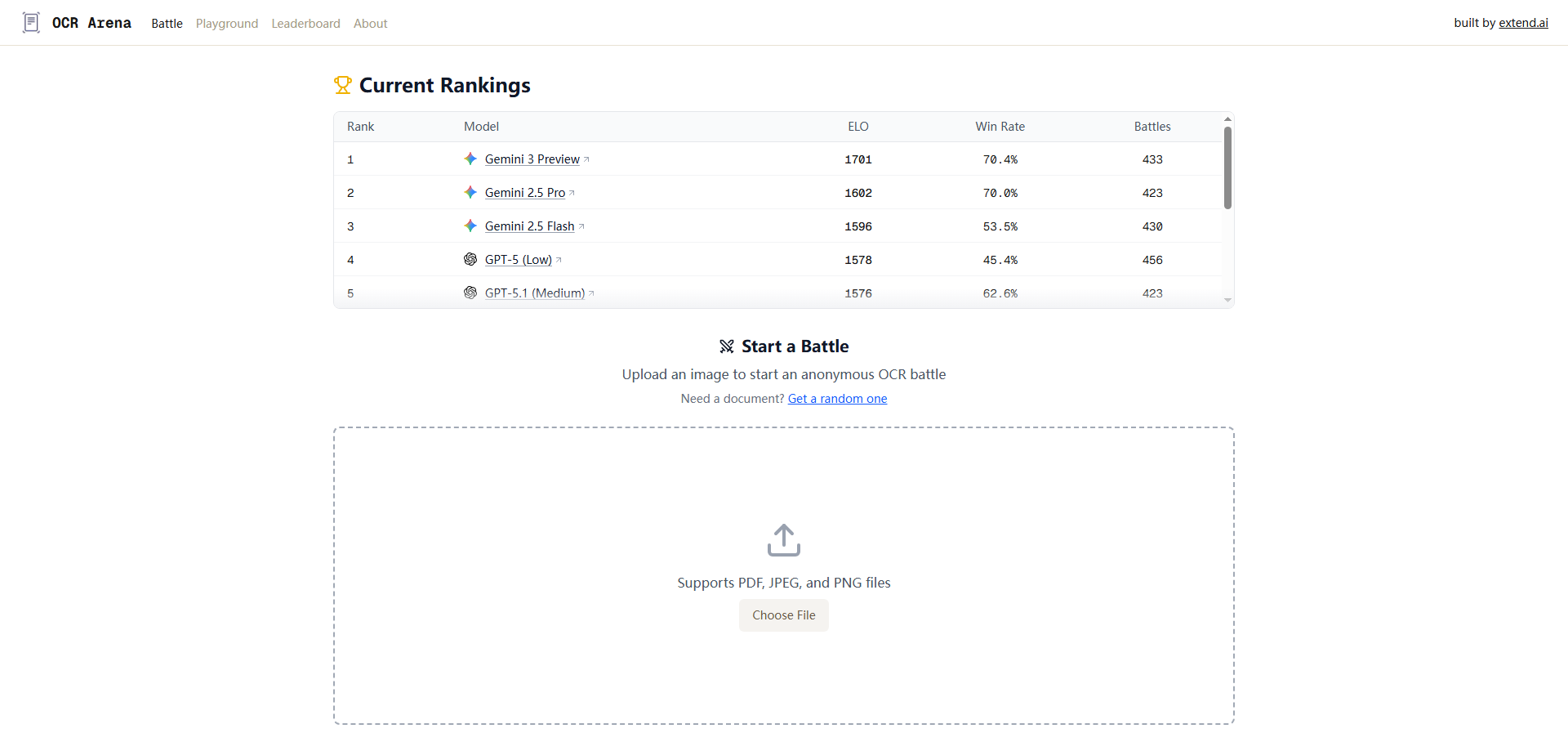

Currently, Python performance optimization happens through several methods: manual optimization by experienced developers who recognize inefficient patterns; profilers like cProfile, line_profiler, or py-spy that identify bottlenecks; static analysis tools like pylint or mypy that catch bugs, type errors, and occasionally inefficient patterns; performance-focused tools like the NumPy library, the Cython compiler, or the PyPy interpreter that provide faster execution paths; and, increasingly, general-purpose AI coding assistants like GitHub Copilot or ChatGPT that can suggest optimizations when asked.

Can Codeflash disrupt these approaches? Let me analyze each.

For manual expert optimization, Codeflash has genuine disruptive potential. Hiring senior developers with performance optimization expertise is expensive. Having an AI that automatically applies their knowledge patterns is economically compelling. For startups and teams without performance specialists, Codeflash could provide expertise they couldn't otherwise afford.

For code profilers, the disruption is partial. Profilers tell you where code is slow; they don't fix it. Codeflash apparently both identifies and fixes problems. That's more comprehensive. However, profilers provide understanding—you learn why code is slow, which builds knowledge. Codeflash's automation might optimize without teaching, which has different value.
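For readers who have not used a profiler, here is a small sketch with the standard library's `cProfile` and `pstats` modules. The function being profiled (`slow_unique`) and the helper (`profile_report`) are hypothetical names for illustration. The report shows where time goes, but deciding how to fix it is still up to you.

```python
import cProfile
import io
import pstats


def slow_unique(items):
    """Deduplicate while preserving order, using a linear scan per item."""
    unique = []
    for item in items:
        if item not in unique:
            unique.append(item)
    return unique


def profile_report(func, *args, top=5):
    """Run func under cProfile and return the top entries as text."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time so the most expensive call paths come first.
    stats.sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


report = profile_report(slow_unique, list(range(2000)) * 2)

if __name__ == "__main__":
    print(report)
```

The output lists call counts and timings per function, which points you at `slow_unique` as the hotspot. The profiler stops there, and the fix is a separate step.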

For static analysis tools, Codeflash offers complementary value rather than replacement. Static analyzers catch bugs, type issues, and style problems beyond just performance. Codeflash focuses specifically on optimization.

For performance libraries like NumPy, no disruption is realistic. These libraries run compiled C code underneath Python, providing performance gains Codeflash can't replicate through source-level code transformation alone. That is a different optimization layer entirely.

For general AI coding assistants, Codeflash's specialization is disruptive. ChatGPT can suggest optimizations when you ask specific questions, but it requires you to identify problems first and ask correctly. Codeflash's automatic analysis and optimization is more proactive and specialized. That focus could deliver superior results for this specific use case.

Where I see most disruption potential is in CI/CD pipeline integration. Currently, code review catches some performance issues, but reviewers are inconsistent and busy. Having automated optimization in the deployment pipeline ensures consistent performance standards without human bottleneck. That's potentially transformative for team workflows.

For educational contexts, Codeflash could disrupt learning optimization skills. Students could see optimized versions of their code and learn patterns. But this is double-edged—learning without understanding might not build lasting skills.

My disruption verdict: Codeflash can disrupt manual optimization workflows and complement existing tooling, but won't replace profilers, performance libraries, or comprehensive code understanding. Specialized disruption rather than complete replacement.

User Acceptance: Who Actually Needs Automatic Python Optimization?

This is where things get really interesting. Python's performance problems are well-known, but do users actually want AI-automated solutions?

Let me map out user segments and their likely acceptance.

Data scientists and machine learning engineers represent a strong acceptance case. These users write Python code that processes massive datasets or runs complex algorithms. Performance directly impacts their productivity—waiting hours for data processing versus minutes changes how they work. They're also not typically performance optimization specialists; their expertise is in algorithms and domain knowledge. Codeflash addressing their Python inefficiencies without requiring them to become optimization experts aligns perfectly with their needs.

Web developers building Python backends have clear performance needs too. API response times directly impact user experience. However, web performance involves many layers—database queries, caching, networking—not just Python code efficiency. Codeflash helps with one piece but not the complete picture. Acceptance is positive but users might expect more comprehensive solutions.

DevOps and platform engineers managing Python infrastructure could embrace this enthusiastically. Automatic optimization integrated into CI/CD aligns with their automation philosophy. Ensuring all deployed code meets performance standards without manual review is valuable. Strong acceptance likely.

Python learners represent interesting acceptance dynamics. Students could use Codeflash's VS Code integration to learn optimization patterns by seeing their code transformed. However, learning requires understanding, not just seeing results. If Codeflash explains why optimizations work, educational acceptance increases. If it just shows "before and after," learning value diminishes.

Enterprise development teams have compelling needs but complex acceptance factors. Performance matters for production systems. But enterprise environments have strict code review processes, security concerns about third-party tools, and resistance to "black box" changes. Even if optimization value is clear, organizational acceptance barriers exist.

However, significant acceptance challenges exist. First, trust in AI-generated code modifications is limited. Developers are naturally cautious about automatic code changes. Even if Codeflash includes verification, trusting that automated optimization preserves correctness requires evidence and experience.

Second, the "up to 10x" claim might backfire. Users expecting 10x improvements on every piece of code will be disappointed. Setting realistic expectations is crucial for acceptance.

Third, Python developers often choose Python specifically because they prioritize development speed over execution speed. For them, adding optimization tooling might feel unnecessary—if performance mattered that much, they'd use a faster language.

Fourth, cost sensitivity matters. The Product Hunt page doesn't mention pricing, but enterprise AI tools are typically expensive. Budget-constrained teams might not be able to justify the cost of "nice to have" optimization.

Fifth, transparency concerns are real. Developers want to understand their code. If Codeflash produces optimizations they don't understand, it might feel uncomfortable even if code runs faster.

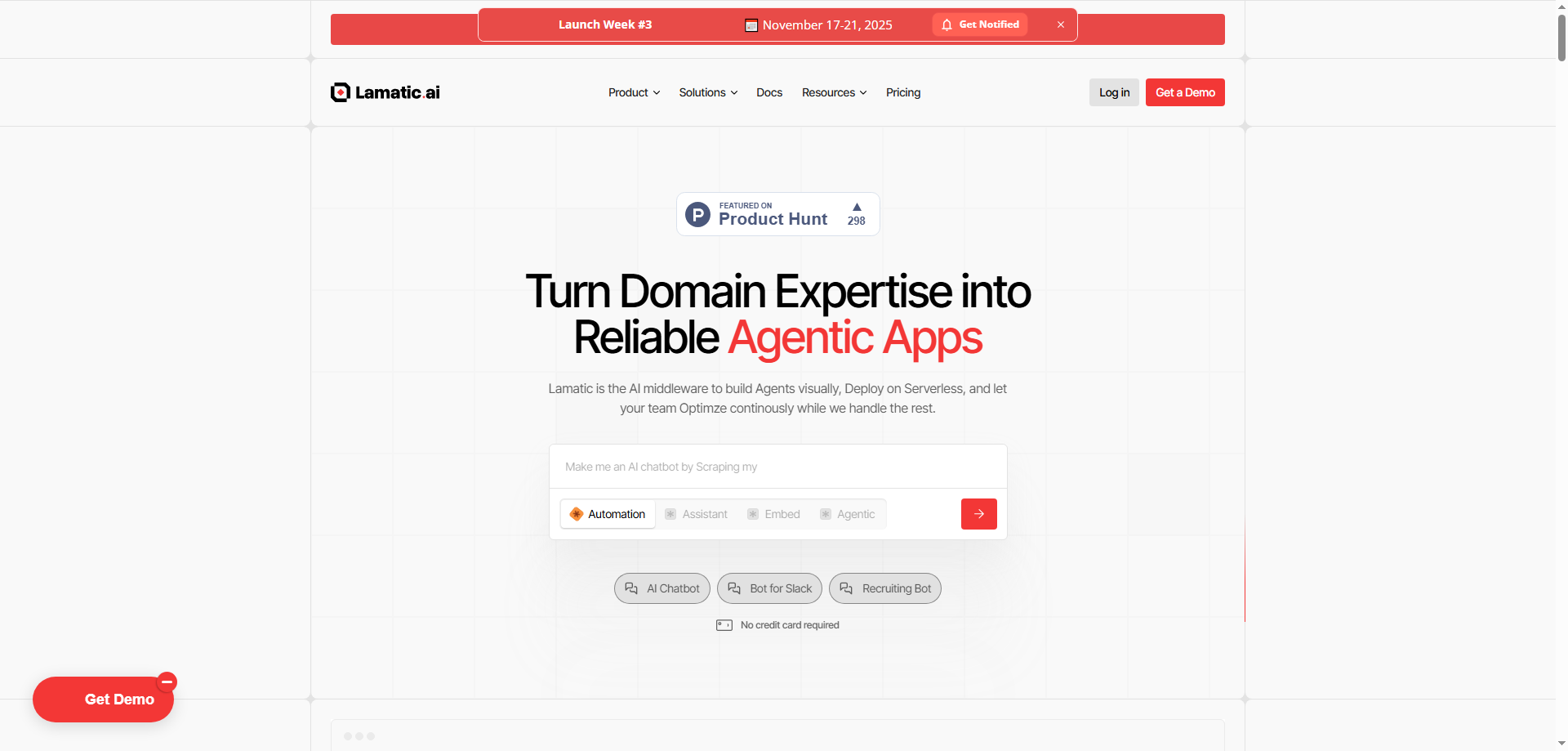

The 61 votes and 17 discussions on Product Hunt show moderate interest. Notably, the discussion count relative to votes is high, suggesting engaged curiosity rather than casual interest. People have questions, which indicates genuine consideration rather than superficial browsing.

My user acceptance assessment: Strong acceptance among performance-sensitive Python users—data scientists, ML engineers, backend developers with scale challenges. Weaker acceptance among general Python developers, enterprise environments with strict policies, and developers who prioritize understanding over automation.

Survival Rating: 3.5 out of 5 Stars

After thorough analysis, I'm rating Codeflash's one-year survival prospects at 3.5 out of 5 stars. Here's my complete reasoning.

Opportunities for Growth

The Python ecosystem's scale provides an enormous market. Python is among the most popular programming languages globally. Capturing even a small percentage of Python developers means a substantial user base.

Performance optimization is an evergreen need. As data grows and applications scale, performance matters more and more. This trend favors Codeflash's value proposition.

The AI coding tools market is exploding. Investment in AI-assisted development is massive. Codeflash riding this wave benefits from general market momentum and investor interest.

The CI/CD integration approach is strategically sound. Embedding into existing workflows reduces adoption friction. Developers don't need to change habits significantly.

Enterprise customers with Python performance challenges represent a potentially lucrative market. Financial services, e-commerce, and data companies both depend on Python and have strict performance requirements.

The educational angle provides a growth avenue. Partnering with coding bootcamps or universities could drive adoption among emerging developers.

Risks to Consider

The verification and correctness guarantee is a critical vulnerability. A single instance where Codeflash's optimization introduces a bug could devastate trust. Code correctness is non-negotiable, and automated transformation carries inherent risks.

Competition from comprehensive AI coding tools is intensifying. GitHub Copilot, Amazon CodeWhisperer, and other generalist AI coding assistants could add optimization features, bundling a capability that Codeflash offers as a standalone product.

The "up to 10x" marketing claim creates the risk of an expectations gap. When users see modest improvements on their specific code, disappointment could drive negative reviews and churn.

Pricing model uncertainty matters. If enterprise-focused pricing excludes individual developers and small teams, growth will be limited. If the product is too cheap, revenue might not cover AI compute costs.

The Python-only focus narrows the market compared to language-agnostic tools. Developers using multiple languages might prefer a single comprehensive tool over a Python-specific solution.

AI model training and maintenance require ongoing investment. Keeping optimization patterns current as Python evolves demands continuous development resources.

Security and privacy concerns around code submission could limit enterprise adoption. Companies might resist sending proprietary code to external services.

Universal Python optimization is a genuinely hard technical problem. Different Python application types (web frameworks, data processing, numerical computing) have vastly different optimization patterns. Delivering consistently high-quality optimizations across all use cases is ambitious.

My Final Thoughts

Codeflash addresses a real problem with a sophisticated approach. Python's performance limitations frustrate developers regularly, and automatic optimization through AI represents a forward-thinking solution. The integration strategy is smart, the specialization provides focus, and the performance need is genuine.

However, the ambitious claims require exceptional execution. "Up to 10x faster" and "any Python code" create expectations that demand consistently excellent results. Trust in automated code modification requires proving both speed improvements and absolute correctness—a high bar.

The 3.5-star rating reflects my belief that Codeflash has strong niche value and can survive serving performance-sensitive Python developers who trust its optimization quality. But becoming widely adopted requires overcoming trust barriers, proving consistent value, and navigating competition from general AI coding assistants.

For Python developers frustrated by performance issues, Codeflash is worth investigating. Start with non-critical code to evaluate optimization quality and build trust. Integrate gradually into workflows rather than immediately applying to production systems.

The vision of AI that automatically optimizes code represents an exciting future for software development. Whether Codeflash specifically fulfills that vision depends on execution quality we'll only understand through real-world usage. The concept is sound, the market need is real, and the approach is creative. Success hinges on delivering promised results consistently without compromising code correctness.

Python may be slow, but our tolerance for that slowness is shrinking. Tools that bridge the gap between Python's productivity and performance needs will find an audience. Codeflash is betting it can be that bridge through AI. That's a worthwhile bet, even if the outcome remains uncertain.