What is DeepMind Gemini Robotics?

DeepMind Gemini Robotics is Google DeepMind’s push toward embodied AI: the fusion of advanced reasoning and robotic execution. It consists of two interconnected models, Gemini Robotics-ER 1.5 and Gemini Robotics 1.5, which function together like a mind and a body.

- Gemini Robotics-ER 1.5 serves as the brain: a reasoning and planning system built on a vision-language model (VLM), where “ER” stands for embodied reasoning. It interprets vague human instructions, searches the web for relevant context, and breaks complex goals into executable steps.

- Gemini Robotics 1.5 acts as the body—a vision-language-action (VLA) system that translates those steps into precise motor movements, coordinating robotic limbs and sensors to carry out real-world actions.

Together, they form a two-part cognitive-physical framework that allows robots to “think before acting.”

How the Gemini Robotics System Works

1. The Brain: Gemini Robotics-ER 1.5

Gemini Robotics-ER 1.5 is designed to understand and reason about ambiguous natural language commands.

Give it a prompt like “Clean up the kitchen”, and it autonomously decomposes the task into sub-actions:

- Clear the table

- Load the dishwasher

- Wipe the countertop

If more context is needed, it searches the web in real time. For instance, it might look up local recycling rules to decide how to sort waste, or check today’s weather forecast to decide whether to pack a raincoat.
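The decomposition described above can be pictured as a small data structure. The sketch below is purely illustrative: `Step`, `decompose`, and the hard-coded plans are hypothetical names invented for this example and are not part of any Gemini API. In the real system the plan is generated by the model rather than read from a lookup table.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One sub-action in a plan (hypothetical structure for illustration)."""
    description: str
    needs_lookup: bool = False  # True if outside context (e.g. local rules) is required


# Hard-coded stand-in for what a reasoning model would generate.
_TOY_PLANS = {
    "clean up the kitchen": [
        Step("Clear the table"),
        Step("Load the dishwasher"),
        Step("Wipe the countertop"),
    ],
    "sort the trash": [
        Step("Look up local recycling rules", needs_lookup=True),
        Step("Place each item in the matching bin"),
    ],
}


def decompose(goal: str) -> list[Step]:
    """Return the toy plan for a goal, or a single pass-through step."""
    return _TOY_PLANS.get(goal.strip().lower(), [Step(goal)])


plan = decompose("Clean up the kitchen")
for i, step in enumerate(plan, start=1):
    print(f"{i}. {step.description}")
```

Marking a step with `needs_lookup` is one simple way to signal that a web search should happen before the step is handed to the action model.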

A notable feature of ER 1.5 is its adjustable “thinking budget.” Users can cap how many tokens the model spends on reasoning: a larger budget allows deeper but slower reasoning, while a smaller one gives faster but less analytical answers. This feature mirrors the tradeoff between speed and accuracy in human decision-making.
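For developers calling the model through the Gemini API, the budget is set per request. The sketch below only builds a JSON request body and does not send anything. The field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`) follow the Gemini API's REST format for thinking-capable models as publicly documented at the time of writing, and the model ID is an assumption; both should be checked against the current documentation. `build_request` is a hypothetical helper.

```python
import json

# Assumed model ID; verify against the current Gemini API model list.
MODEL_ID = "gemini-robotics-er-1.5-preview"


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a generateContent-style request body with a capped thinking budget."""
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Fewer thinking tokens favors latency; more favors deeper reasoning.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


fast = build_request("Point to the mug on the table.", thinking_budget=0)
careful = build_request("Plan how to load the dishwasher.", thinking_budget=1024)
print(MODEL_ID)
print(json.dumps(careful, indent=2))
```

A simple pointing query might use a small budget, while a multi-step planning query is a more natural fit for a larger one.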

In essence, Gemini Robotics-ER 1.5 is like having an AI project manager that plans, searches, and reasons before execution.

2. The Body: Gemini Robotics 1.5

Gemini Robotics 1.5 transforms those plans into real-world actions. It interprets multimodal input—visual data, spoken commands, and textual instructions—to guide robotic limbs, grippers, or humanoid forms with precision.

Its standout capability is “cross-embodiment learning.” Skills learned on one robot (such as a robotic arm) can be transferred to another (such as a humanoid) without retraining the model for each new embodiment. This reduces data collection costs and training time, both major bottlenecks in robotics.

For example, a manipulation task learned on the ALOHA 2 robotic platform can be transferred to a bi-arm Franka system or even to Apptronik’s humanoid Apollo robot. This means knowledge is shared across machines, much as humans teach each other.
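The idea of one skill serving several embodiments can be loosely pictured with ordinary polymorphism. This is only an interface-level analogy, not the learned transfer itself: in Gemini Robotics 1.5 the mapping from skill to motor commands is learned by the model, whereas here it is hand-written. The classes, `run_skill`, and the command strings are all hypothetical.

```python
from typing import Protocol


class Embodiment(Protocol):
    """Anything that can turn an abstract action into robot-specific commands."""
    name: str

    def execute(self, action: str, target: str) -> str: ...


class ArmRobot:
    def __init__(self, name: str, arms: int) -> None:
        self.name = name
        self.arms = arms

    def execute(self, action: str, target: str) -> str:
        return f"{self.name}: {action} {target} using {self.arms} arm(s)"


class Humanoid:
    def __init__(self, name: str) -> None:
        self.name = name

    def execute(self, action: str, target: str) -> str:
        return f"{self.name}: walk to {target}, then {action} it"


def run_skill(robot: Embodiment, skill: list[tuple[str, str]]) -> list[str]:
    """Run the same abstract skill on whichever embodiment is supplied."""
    return [robot.execute(action, target) for action, target in skill]


# One skill description, reused unchanged across three embodiments.
pick_and_place = [("grasp", "the cup"), ("place", "the cup")]
for robot in (ArmRobot("ALOHA 2", 2), ArmRobot("Franka", 2), Humanoid("Apollo")):
    print(run_skill(robot, pick_and_place))
```

The point of the analogy is that the skill description never changes; only the embodiment-specific translation does.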

Moreover, Gemini Robotics 1.5 adds “transparent reasoning before motion.” It states its intended actions in natural language before performing them, which makes its behavior easier to interpret and audit in high-stakes environments.
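A toy version of this “explain, then act” pattern is sketched below. It assumes each step arrives as a (rationale, action) pair and simply logs the rationale before invoking the action. `act_with_rationale` and the laundry steps are hypothetical, and the real model produces its reasoning itself rather than receiving it as input.

```python
from typing import Callable


def act_with_rationale(
    steps: list[tuple[str, str]],
    do: Callable[[str], None],
    log: Callable[[str], None] = print,
) -> None:
    """For each (rationale, action) pair, log the rationale, then act."""
    for rationale, action in steps:
        log(f"THINK: {rationale}")
        do(action)
        log(f"DONE:  {action}")


executed: list[str] = []
trace: list[str] = []
act_with_rationale(
    [
        ("The red shirt belongs with colors.", "move red shirt to colors bin"),
        ("The white sock belongs with whites.", "move white sock to whites bin"),
    ],
    do=executed.append,   # stand-in for sending a command to the robot
    log=trace.append,     # stand-in for an operator-visible log
)
print("\n".join(trace))
```

Because every action is preceded by a logged rationale, an operator can review or halt the sequence before motion occurs.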

Benchmarks and Achievements

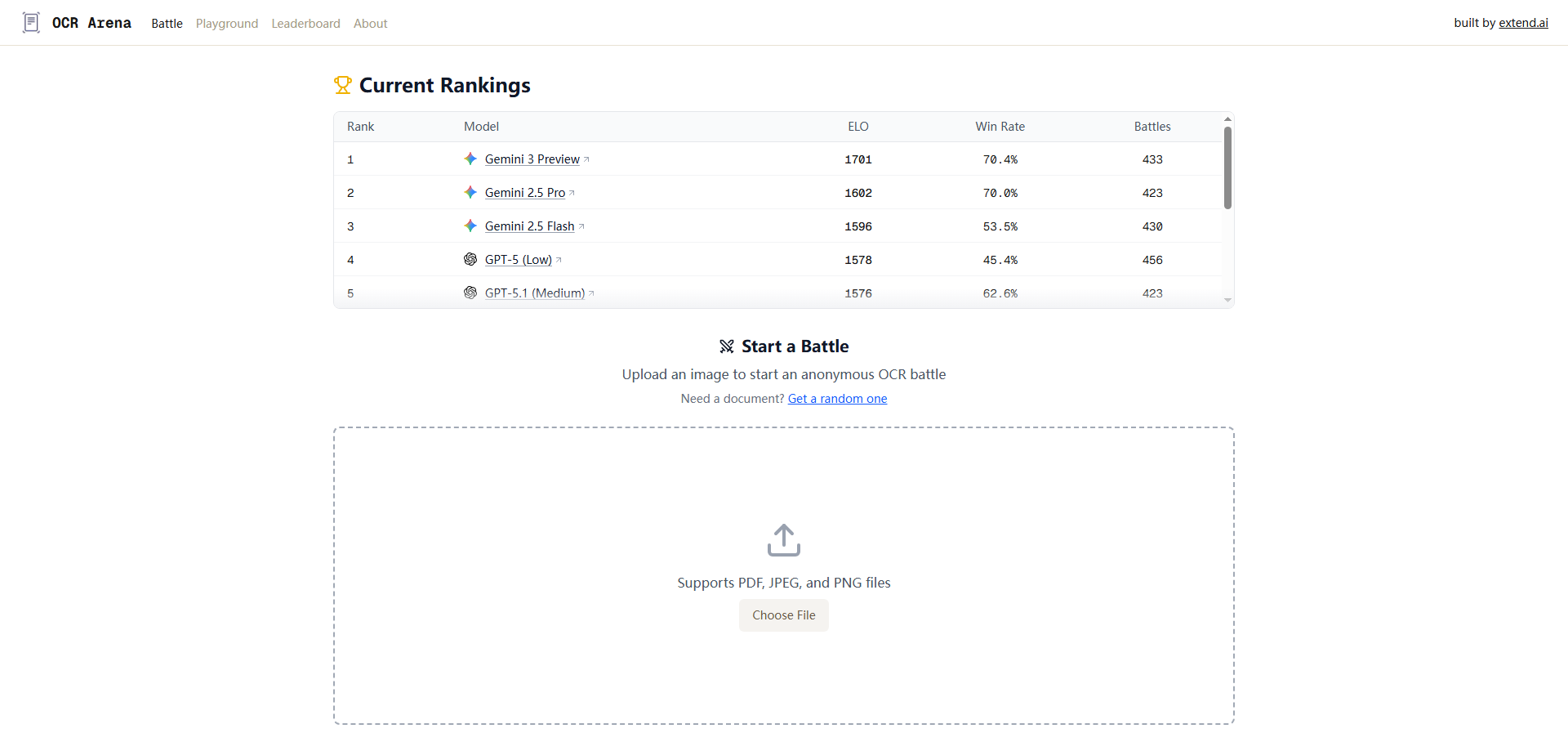

DeepMind reported that Gemini Robotics-ER 1.5 achieved state-of-the-art (SOTA) performance across 15 embodied reasoning benchmarks, including Point-Bench, RefSpatial, and RoboSpatial-Pointing.

These tests cover spatial understanding, 3D reasoning, and visual question answering, indicating that the model does not just “see” a scene but can also reason about the space within it.

By DeepMind’s account, the model’s aggregated score across these benchmarks was the highest among the models compared, a crucial step toward reliable real-world automation.
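Pointing benchmarks ask the model to name a location in an image. As a rough illustration, the sketch below converts a predicted point to pixel coordinates and checks whether it lands inside a target box. It assumes points arrive as `[y, x]` normalized to a 0-1000 range, which is the convention the public Gemini API documentation appears to describe for ER models; that should be verified before relying on it. The box-based hit test is a simplification (real benchmarks may score against segmentation masks), and `to_pixels` and `hits_box` are hypothetical helpers.

```python
def to_pixels(point_yx: tuple[float, float], width: int, height: int) -> tuple[int, int]:
    """Convert a [y, x] point normalized to 0-1000 into (x, y) pixel coordinates."""
    y_norm, x_norm = point_yx
    x = round(x_norm / 1000 * width)
    y = round(y_norm / 1000 * height)
    return x, y


def hits_box(pixel_xy: tuple[int, int], box: tuple[int, int, int, int]) -> bool:
    """Return True if (x, y) lies inside box = (x_min, y_min, x_max, y_max)."""
    x, y = pixel_xy
    x_min, y_min, x_max, y_max = box
    return x_min <= x <= x_max and y_min <= y <= y_max


# Hypothetical prediction for "the mug" in a 640x480 image.
predicted = (500, 250)            # [y, x], normalized to 0-1000
mug_box = (140, 220, 200, 280)    # made-up ground-truth box in pixels
pixel = to_pixels(predicted, width=640, height=480)
print(pixel, hits_box(pixel, mug_box))
```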

Real-World Demonstrations

DeepMind didn’t stop at benchmarks—they showcased Gemini Robotics handling surprisingly human-like tasks, including:

- Checking live London weather before deciding whether to pack a raincoat or sunglasses.

- Searching online for city-specific recycling rules before sorting trash.

- Separating laundry by color to prevent clothing mix-ups.

Each task required context retrieval, step planning, and physical execution, all managed autonomously. This isn’t simple “command-following” AI—it’s autonomous reasoning and action orchestration.
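The retrieve, plan, execute sequence these demos share can be sketched as a small loop. Everything here is a stand-in: `run_task` is a hypothetical orchestrator, and the three stubs replace web search, the reasoning model, and the action model respectively. It shows the control flow only, not how DeepMind wires the two models together.

```python
from typing import Callable


def run_task(
    goal: str,
    search: Callable[[str], str],
    plan: Callable[[str, str], list[str]],
    execute: Callable[[str], bool],
) -> list[str]:
    """Retrieve context, plan steps, then execute them in order.

    Stops at the first step that fails and returns the steps completed so far.
    """
    context = search(goal)
    completed: list[str] = []
    for step in plan(goal, context):
        if not execute(step):
            break
        completed.append(step)
    return completed


# Stubs standing in for web search, the reasoning model, and the action model.
def fake_search(goal: str) -> str:
    return "rain expected" if "pack" in goal else ""


def fake_plan(goal: str, context: str) -> list[str]:
    item = "raincoat" if "rain" in context else "sunglasses"
    return [f"fetch {item}", f"put {item} in bag"]


done = run_task("pack for London", fake_search, fake_plan, execute=lambda step: True)
print(done)
```

Keeping search, planning, and execution behind separate callables mirrors the article's split between the reasoning model and the action model, and makes each stage easy to swap or test in isolation.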

These demos illustrate how Gemini Robotics can bridge digital intelligence and physical agency, making robots not just responsive but proactive.

From Research to Real-World Integration

At the time of writing, Gemini Robotics-ER 1.5 is available through the Gemini API and Google AI Studio, allowing developers to integrate its reasoning into their own AI workflows.

Meanwhile, Gemini Robotics 1.5, the action model, is currently in limited release with trusted research and enterprise partners. DeepMind emphasizes a safety-first rollout, given that this model directly controls real-world hardware.

DeepMind has also introduced Gemini Robotics On-Device, a version that runs locally on the robot without depending on the cloud. While less powerful than the cloud models, it supports secure, offline operation, which suits safety-critical settings such as healthcare, defense, and home environments.

The Magic of Skill Transfer

One of the most groundbreaking aspects of Gemini Robotics is skill transfer.

In robotics, training every machine from scratch is expensive and inefficient. But Gemini Robotics changes that by enabling cross-platform generalization—a robot trained to pick up objects on one system can perform similar actions on another robot with different hardware configurations.

This approach reduces the traditional data scarcity bottleneck and moves robotics closer to scalable, transferable intelligence—something the industry has been chasing for years.

Why Gemini Robotics Matters

DeepMind Gemini Robotics redefines what AI can be: not just a chatbot or a language model, but an embodied intelligence that connects thought with action.

It represents a new architecture of cooperation:

- ER 1.5 thinks, plans, and searches.

- Robotics 1.5 executes, adapts, and learns.

This synergy could transform industries from logistics to domestic automation. Imagine robots that autonomously plan cleaning routines, manage inventories, or perform repairs while consulting the internet for the latest safety standards.

By combining perception, reasoning, and embodiment, Gemini Robotics brings us one step closer to truly autonomous machines that understand and interact with the world like humans.

Challenges and Limitations

Despite its promise, DeepMind Gemini Robotics is not flawless. Experts caution that several fundamental challenges remain:

- Dexterity and manipulation: Fine motor control for complex hand movements is still limited.

- Safety and robustness: Real-world environments remain unpredictable.

- Pure observational learning: Learning from unlabelled human demonstrations remains difficult.

DeepMind acknowledges these gaps, emphasizing that Gemini Robotics is a milestone, not an endpoint. Large-scale deployment in human spaces will require continued innovation in safety, perception, and adaptability.

A Glimpse Into the Future

Picture this scenario:

- The “brain” (ER 1.5) asks, “What are today’s recycling guidelines and weather conditions?”

- The “body” (Robotics 1.5) responds, “Got it. Sorting waste now and packing your umbrella.”

They communicate naturally, dividing labor seamlessly. The mundane tasks of everyday life—sorting, cleaning, organizing—become automated, consistent, and explainable.

It’s a realistic, incremental step toward the intelligent household and industrial assistant that once lived only in science fiction.

FAQs About DeepMind Gemini Robotics

1. What is DeepMind Gemini Robotics? It’s an AI system that integrates reasoning (Gemini Robotics-ER 1.5) and physical execution (Gemini Robotics 1.5) to enable intelligent, autonomous robots.

2. How does Gemini Robotics-ER 1.5 differ from Gemini Robotics 1.5? ER 1.5 focuses on reasoning, planning, and searching for information, while Robotics 1.5 focuses on vision, motor control, and task execution.

3. Can Gemini Robotics access the internet? Yes. ER 1.5 can search the web in real time for contextual data, such as rules, instructions, or current weather.

4. What is “cross-embodiment learning”? It’s the ability to transfer skills learned on one robot to others—reducing the cost of retraining and enabling shared intelligence.

5. Is Gemini Robotics available to the public? The ER 1.5 model is accessible through the Gemini API, while Robotics 1.5 is currently available only to trusted partners.

6. Can Gemini Robotics operate offline? Yes. An on-device version allows local, offline operation for privacy-sensitive or safety-critical environments.

Conclusion

DeepMind Gemini Robotics marks a turning point in AI development—where thought meets motion, and digital reasoning meets physical capability. By pairing the reasoning power of ER 1.5 with the adaptable execution of Robotics 1.5, Google DeepMind has built a foundation for truly embodied intelligence.

It’s a powerful vision of machines that can think, plan, and act—not as isolated algorithms, but as collaborative partners in the human world.

As DeepMind refines safety and dexterity, Gemini Robotics could redefine our relationship with technology—from controlling tools to collaborating with intelligent companions.